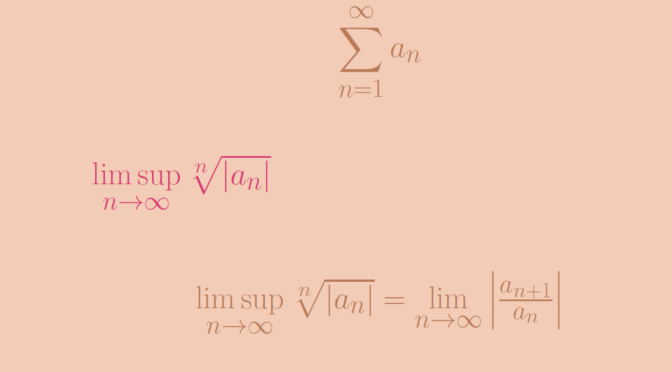

Consider a series of functions \(\displaystyle \sum f_n\), where each \(f_n\) is defined on a set \(S\) with values in \(\mathbb R\) or \(\mathbb C\). It is known that if \(\displaystyle \sum f_n\) is normally convergent, i.e. if the series \(\displaystyle \sum \Vert f_n \Vert_\infty\) converges, then \(\displaystyle \sum f_n\) is uniformly convergent.

The converse is not true and we provide two counterexamples.

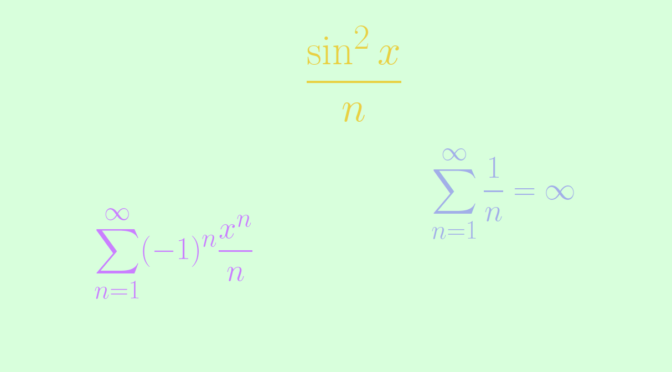

Consider first the sequence of functions \((g_n)\) defined on \(\mathbb R\) by:

\[g_n(x) = \begin{cases}

\frac{\sin^2 x}{n} & \text{for } x \in (n \pi, (n+1) \pi)\\

0 & \text{else}

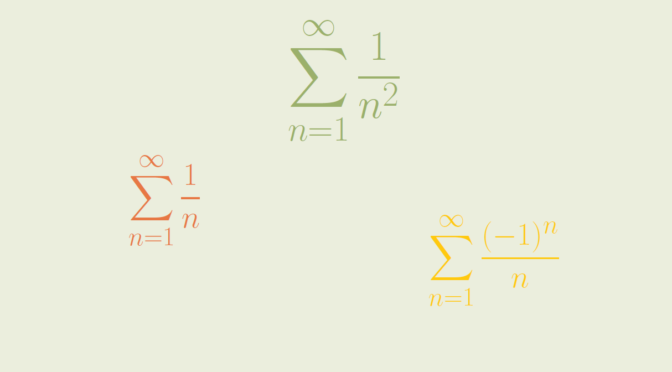

\end{cases}\] The series \(\displaystyle \sum \Vert g_n \Vert_\infty\) diverges as for all \(n \in \mathbb N\), \(\Vert g_n \Vert_\infty = \frac{1}{n}\) and the harmonic series \(\sum \frac{1}{n}\) diverges. However the series \(\displaystyle \sum g_n\) converges uniformly as for \(x \in \mathbb R\) the sum \(\displaystyle \sum g_n(x)\) is having only one term and \[

\vert R_n(x) \vert = \left\vert \sum_{k=n+1}^\infty g_k(x) \right\vert \le \frac{1}{n+1}\]
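The two claims about \((g_n)\) can be checked numerically. The following is a minimal sketch, not a proof: the helper names `g`, `sup_norm` and `tail`, the sampling resolution and the truncation index `k_max` are all illustrative assumptions, and the supremum is only approximated by sampling.

```python
import math


def g(n: int, x: float) -> float:
    """g_n(x) = sin(x)^2 / n on the open interval (n*pi, (n+1)*pi), 0 elsewhere."""
    if n * math.pi < x < (n + 1) * math.pi:
        return math.sin(x) ** 2 / n
    return 0.0


def sup_norm(n: int, samples: int = 1001) -> float:
    """Approximate sup |g_n| by sampling interior points of (n*pi, (n+1)*pi).

    With an odd number of samples, the midpoint n*pi + pi/2 is one of them.
    """
    xs = (n * math.pi + math.pi * (j + 0.5) / samples for j in range(samples))
    return max(g(n, x) for x in xs)


def tail(n: int, x: float, k_max: int = 200) -> float:
    """Truncated remainder sum_{k=n+1}^{k_max} g_k(x) (k_max is an assumption)."""
    return sum(g(k, x) for k in range(n + 1, k_max + 1))


if __name__ == "__main__":
    # Partial sums of the sup norms behave like the harmonic series.
    for big_n in (10, 50, 100):
        total = sum(sup_norm(n) for n in range(1, big_n + 1))
        print(f"sum of sup norms up to n={big_n}: {total:.4f}")

    # The remainder stays below 1/(n+1) at every sampled point.
    grid = [0.01 * j for j in range(0, 20000)]
    for n in (1, 5, 20):
        worst = max(tail(n, x) for x in grid)
        print(f"n={n}: max sampled tail {worst:.5f}, bound {1 / (n + 1):.5f}")
```

The partial sums of the sampled sup norms keep growing, while the sampled remainder never exceeds \(\frac{1}{n+1}\), in line with the argument above.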

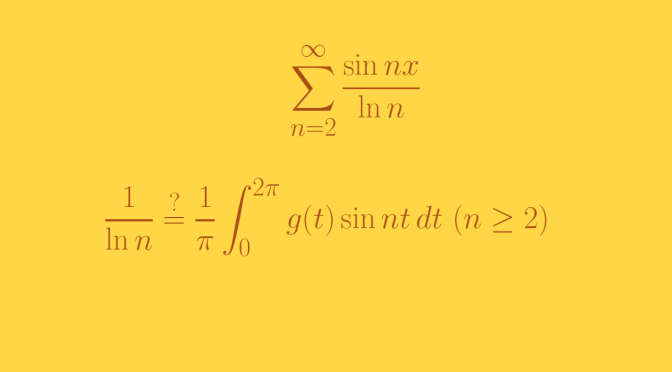

For our second example, we consider the sequence of functions \((f_n)_{n \ge 1}\) defined on \([0,1]\) by \(f_n(x) = (-1)^n \frac{x^n}{n}\). For \(x \in [0,1]\), \(\displaystyle \sum (-1)^n \frac{x^n}{n}\) is an alternating series whose terms decrease monotonically to \(0\) in absolute value. By the Leibniz test, the series converges, so its sum is well defined, and we can apply the classical remainder estimate \[
\displaystyle \left\vert \sum_{k=1}^\infty (-1)^k \frac{x^k}{k} - \sum_{k=1}^m (-1)^k \frac{x^k}{k} \right\vert \le \frac{x^{m+1}}{m+1} \le \frac{1}{m+1}\] for \(m \ge 1\). Since the bound \(\frac{1}{m+1}\) does not depend on \(x\) and tends to \(0\), the series \(\displaystyle \sum (-1)^n \frac{x^n}{n}\) converges uniformly on \([0,1]\).

However, the convergence is not normal, as \(\Vert f_n \Vert_\infty = \sup\limits_{x \in [0,1]} \frac{x^n}{n} = \frac{1}{n}\) and the harmonic series \(\sum \frac{1}{n}\) diverges.
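The second example can also be illustrated numerically. This is a rough sketch under stated assumptions: it uses the closed form \(\sum_{k \ge 1} (-1)^k \frac{x^k}{k} = -\ln(1+x)\) on \([0,1]\), a standard fact that is not part of the argument above, and the helper names and the grid size are hypothetical choices.

```python
import math


def f(n: int, x: float) -> float:
    """f_n(x) = (-1)^n x^n / n."""
    return (-1) ** n * x ** n / n


def partial_sum(m: int, x: float) -> float:
    """Sum of f_k(x) for k = 1..m."""
    return sum(f(k, x) for k in range(1, m + 1))


def limit(x: float) -> float:
    """Closed form of the full sum on [0, 1]: -ln(1 + x) (assumed, see lead-in)."""
    return -math.log1p(x)


def uniform_error(m: int, points: int = 101) -> float:
    """Largest sampled gap between the limit and the m-th partial sum on [0, 1]."""
    xs = (j / (points - 1) for j in range(points))
    return max(abs(limit(x) - partial_sum(m, x)) for x in xs)


def sup_norm_partial_sum(big_n: int) -> float:
    """Sum of ||f_n||_inf = 1/n for n = 1..big_n (harmonic partial sum)."""
    return sum(1.0 / n for n in range(1, big_n + 1))


if __name__ == "__main__":
    for m in (1, 5, 20, 100):
        print(f"m={m}: sampled error {uniform_error(m):.6f}, bound {1 / (m + 1):.6f}")
    for big_n in (10, 100, 1000):
        print(f"N={big_n}: sum of sup norms {sup_norm_partial_sum(big_n):.4f}")
```

The sampled error stays below \(\frac{1}{m+1}\) for each \(m\) tried, while the sums of the sup norms keep growing roughly like \(\ln N\).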