Consider, for \(n \ge 2\), the linear space \(\mathcal M_n(\mathbb C)\) of complex \(n \times n\) matrices. The question we deal with is the following: does every matrix \(T \in \mathcal M_n(\mathbb C)\) have a square root \(S \in \mathcal M_n(\mathbb C)\), i.e. a matrix such that \(S^2=T\)?

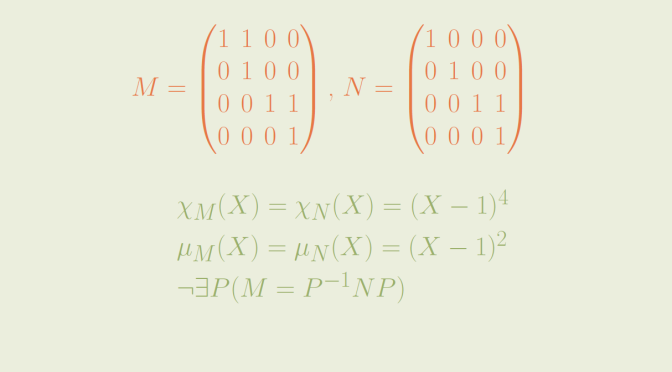

First, note that if \(T\) is similar to \(V\), say \(T = P^{-1} V P\), and \(V\) has a square root \(U\), then \(T\) also has a square root: \(V=U^2\) implies \(T=\left(P^{-1} U P\right)^2\).
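Expanding the square shows why: the inner factor \(P P^{-1}\) collapses to the identity.
\[
\left(P^{-1} U P\right)^2 = P^{-1} U \left(P P^{-1}\right) U P = P^{-1} U^2 P = P^{-1} V P = T.
\]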

Diagonalizable matrices

Suppose that \(T\) is similar to a diagonal matrix
\[
D=\begin{bmatrix}
d_1 & 0 & \dots & 0 \\
0 & d_2 & \dots & 0 \\
\vdots & \vdots & \ddots & \vdots \\
0 & 0 & \dots & d_n
\end{bmatrix}.
\]
Every nonzero complex number has exactly two square roots, while \(0\) has only one. Therefore each \(d_i\) has at least one square root \(d_i^\prime\), and the matrix
\[
D^\prime=\begin{bmatrix}
d_1^\prime & 0 & \dots & 0 \\
0 & d_2^\prime & \dots & 0 \\
\vdots & \vdots & \ddots & \vdots \\
0 & 0 & \dots & d_n^\prime
\end{bmatrix}
\]
is a square root of \(D\), since squaring a diagonal matrix squares each of its diagonal entries. By the remark on similar matrices above, \(T\) then has a square root as well.
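The diagonal construction, combined with the similarity remark, can be sketched numerically. The Python below is our own illustration, not code from the original post. It assumes the diagonalization \(T = P^{-1} D P\) is already known, so the caller supplies \(P\), \(P^{-1}\) and the diagonal entries \(d_i\); the helper names `matmul` and `sqrt_from_diagonalization` are hypothetical. It takes the principal square root of each \(d_i\) with `cmath.sqrt` and conjugates back.

```python
import cmath

# A square matrix is stored as a list of rows.
Matrix = list[list[complex]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Return the product a @ b of two n x n matrices."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def sqrt_from_diagonalization(p: Matrix, p_inv: Matrix, d: list[complex]) -> Matrix:
    """Given T = P^{-1} D P with D = diag(d), return S = P^{-1} D' P.

    D' = diag(d'_1, ..., d'_n) uses the principal square root of each d_i
    (cmath.sqrt); flipping the sign of any nonzero d'_i gives another root.
    """
    n = len(d)
    d_prime = [[cmath.sqrt(d[i]) if i == j else 0j for j in range(n)]
               for i in range(n)]
    # D'^2 = D, hence S^2 = P^{-1} D'^2 P = P^{-1} D P = T.
    return matmul(p_inv, matmul(d_prime, p))


# Hypothetical example: P and its inverse are chosen by hand.
P = [[1, 1], [0, 1]]
P_inv = [[1, -1], [0, 1]]
d = [4, -1]
T = matmul(P_inv, matmul([[4, 0], [0, -1]], P))  # T = [[4, 5], [0, -1]]
S = sqrt_from_diagonalization(P, P_inv, d)

# Check S^2 = T up to floating-point rounding.
S2 = matmul(S, S)
assert all(abs(S2[i][j] - T[i][j]) < 1e-12 for i in range(2) for j in range(2))
```

In this example \(T\) has the distinct eigenvalues \(4\) and \(-1\), so it is diagonalizable, and the sketch returns \(S = \begin{bmatrix} 2 & 2-i \\ 0 & i \end{bmatrix}\).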