In that article, I provided an example of a continuous function with a divergent Fourier series. Here we prove the existence of such a function using the Banach-Steinhaus theorem, also called the uniform boundedness principle.

Theorem (Uniform Boundedness Theorem) Let \((X, \Vert \cdot \Vert_X)\) be a Banach space and \((Y, \Vert \cdot \Vert_Y)\) be a normed vector space. Suppose that \(F\) is a set of continuous linear operators from \(X\) to \(Y\). If for all \(x \in X\) one has \[
\sup\limits_{T \in F} \Vert T(x) \Vert_Y \lt \infty\] then \[
\sup\limits_{T \in F, \ \Vert x \Vert_X = 1} \Vert T(x) \Vert_Y \lt \infty.\]

Let’s take for \(X\) the vector space \(\mathcal C_{2 \pi}\) of continuous functions from \(\mathbb R\) to \(\mathbb C\) which are periodic with period \(2 \pi\) endowed with the norm \(\Vert f \Vert_\infty = \sup\limits_{- \pi \le t \le \pi} \vert f(t) \vert\). \((\mathcal C_{2 \pi}, \Vert \cdot \Vert_\infty)\) is a Banach space. For the vector space \(Y\), we take the complex numbers \(\mathbb C\) endowed with the modulus.

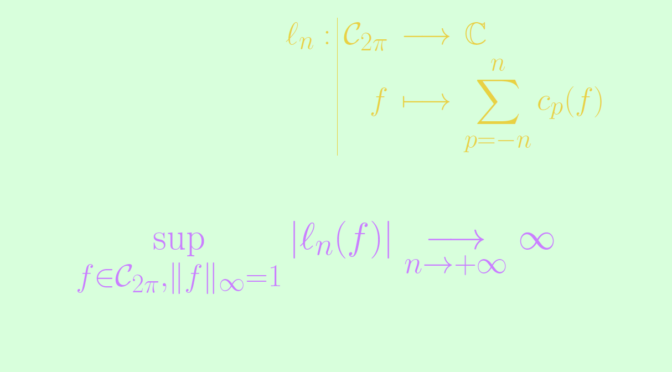

For \(n \in \mathbb N\), the map \[

\begin{array}{l|rcl}

\ell_n : & \mathcal C_{2 \pi} & \longrightarrow & \mathbb C \\

& f & \longmapsto & \displaystyle \sum_{p=-n}^n c_p(f) \end{array}\] is a linear operator, where for \(p \in \mathbb Z\), \(c_p(f)\) denotes the complex Fourier coefficient \[

c_p(f) = \frac{1}{2 \pi} \int_{- \pi}^{\pi} f(t) e^{-i p t} \ dt\]
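
As a numerical illustration, note that \(\ell_n(f)\) is the \(n\)-th partial sum of the Fourier series of \(f\) evaluated at \(0\). The following is a minimal Python sketch, assuming NumPy is available; the helper names `fourier_coefficient` and `ell` and the grid size are illustrative choices, not part of the argument. It approximates \(c_p(f)\) by a Riemann sum on a uniform grid and adds the coefficients up.

```python
import numpy as np

def fourier_coefficient(f, p, num_points=4096):
    """Approximate c_p(f) = (1/2pi) * integral over [-pi, pi] of f(t) e^{-ipt} dt."""
    # Uniform grid on [-pi, pi). For a 2*pi-periodic integrand the plain
    # Riemann sum coincides with the trapezoidal rule.
    t = np.linspace(-np.pi, np.pi, num_points, endpoint=False)
    # mean(...) equals (1/2pi) * (2pi/N) * sum(...), i.e. the normalized integral.
    return np.mean(f(t) * np.exp(-1j * p * t))

def ell(f, n, num_points=4096):
    """Approximate ell_n(f), the sum of c_p(f) for p = -n, ..., n."""
    return sum(fourier_coefficient(f, p, num_points) for p in range(-n, n + 1))

# Example (hypothetical test function): f = cos has c_1 = c_{-1} = 1/2 and all
# other coefficients zero, so ell_n(cos) should be close to cos(0) = 1 for n >= 1.
value = ell(np.cos, 3)
print(abs(value - 1.0))
```

For a trigonometric polynomial such as \(\cos\), the sum stabilizes as soon as \(n\) reaches the degree. The point of the article is that this need not happen for a general continuous function.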

We now prove that

\begin{align*}

\Lambda_n &= \sup\limits_{f \in \mathcal C_{2 \pi}, \Vert f \Vert_\infty=1} \vert \ell_n(f) \vert\\

&= \frac{1}{2 \pi} \int_{- \pi}^{\pi} \left\vert \frac{\sin (2n+1)\frac{t}{2}}{\sin \frac{t}{2}} \right\vert \ dt = \frac{1}{2 \pi} \int_{- \pi}^{\pi} \left\vert h_n(t) \right\vert \ dt,

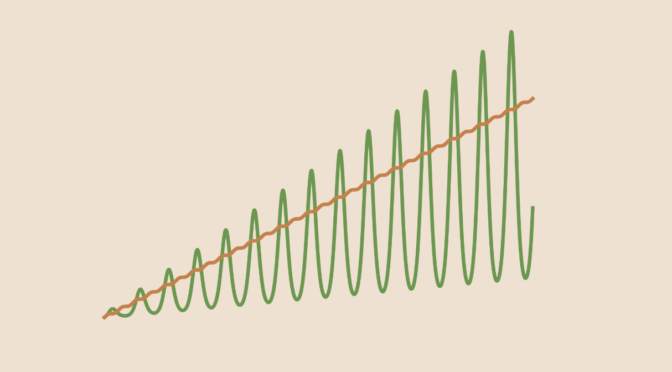

\end{align*} where one can notice that the function \[

\begin{array}{l|rcll}

h_n : & [- \pi, \pi] & \longrightarrow & \mathbb C \\

& t & \longmapsto & \frac{\sin (2n+1)\frac{t}{2}}{\sin \frac{t}{2}} &\text{for } t \neq 0\\

& 0 & \longmapsto & 2n+1

\end{array}\] is continuous.
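
To get a feeling for the size of \(\Lambda_n\), the integral \(\frac{1}{2 \pi} \int_{- \pi}^{\pi} \vert h_n(t) \vert \ dt\) can be approximated numerically. The sketch below assumes NumPy and uses a midpoint rule; the function names `h` and `lebesgue_constant` and the number of sample points are illustrative assumptions. It is only a numerical check, not a proof.

```python
import numpy as np

def h(n, t):
    """Evaluate h_n(t) = sin((2n+1) t/2) / sin(t/2), with h_n(0) = 2n+1."""
    t = np.asarray(t, dtype=float)
    s = np.sin(t / 2)
    # Replace zeros of the denominator by 1 so the division never fails;
    # those entries are overwritten by the limit value 2n+1 below.
    safe = np.where(s == 0, 1.0, s)
    return np.where(s == 0, 2 * n + 1, np.sin((2 * n + 1) * t / 2) / safe)

def lebesgue_constant(n, num_points=200_000):
    """Midpoint-rule approximation of (1/2pi) * integral of |h_n| over [-pi, pi]."""
    step = 2 * np.pi / num_points
    t = -np.pi + (np.arange(num_points) + 0.5) * step
    # mean(...) equals (1/2pi) * step * sum(...).
    return np.mean(np.abs(h(n, t)))

for n in (0, 1, 10, 100, 1000):
    print(n, lebesgue_constant(n))
```

The printed values increase slowly with \(n\), in line with the classical fact that \(\Lambda_n\) grows like \(\frac{4}{\pi^2} \ln n\). This unboundedness is what makes the uniform boundedness theorem applicable.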
