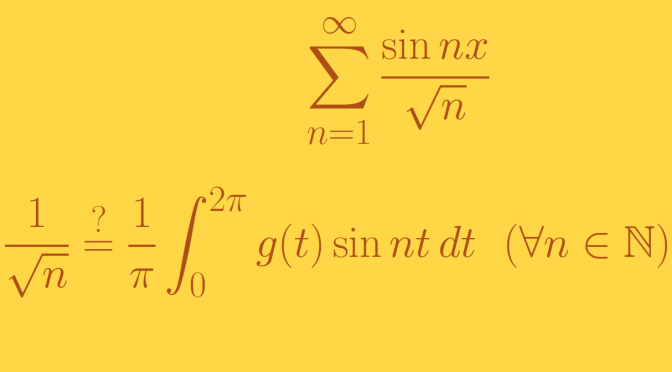

We’re looking here at trigonometric series \[f(x) = \frac{a_0}{2} + \sum_{n=1}^\infty (a_n \cos nx + b_n \sin nx)\] that converge but are not Fourier series. This means that there is no integrable function \(g\) for which the coefficients \(a_n\) and \(b_n\) can be written as\[
\begin{array}{ll}
a_n = \frac{1}{\pi} \int_0^{2 \pi} g(t) \cos nt \, dt & (n= 0, 1, \dots) \\
b_n = \frac{1}{\pi} \int_0^{2 \pi} g(t) \sin nt \, dt & (n= 1, 2, \dots)
\end{array}\]
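Since the coefficient formulas are Riemann integrals, they can be approximated by Riemann sums. The sketch below is only an illustration, not part of the original argument: the helper name `fourier_coefficients` and the choice of the midpoint rule with 20000 sample points are assumptions.

```python
import math

def fourier_coefficients(g, n_max, samples=20000):
    """Hypothetical helper: approximate a_n (n = 0..n_max) and b_n (n = 1..n_max)
    of g on [0, 2*pi] with a midpoint Riemann sum, using the 1/pi normalization
    of the formulas above. b[0] is a placeholder 0.0 so that b[n] matches b_n."""
    h = 2 * math.pi / samples
    # Midpoints of the `samples` equal subintervals of [0, 2*pi].
    midpoints = [(k + 0.5) * h for k in range(samples)]
    values = [g(t) for t in midpoints]
    a = [sum(v * math.cos(n * t) for v, t in zip(values, midpoints)) * h / math.pi
         for n in range(n_max + 1)]
    b = [0.0] + [sum(v * math.sin(n * t) for v, t in zip(values, midpoints)) * h / math.pi
                 for n in range(1, n_max + 1)]
    return a, b

# Example: g(t) = t, whose exact coefficients are a_0 = 2*pi, a_n = 0, b_n = -2/n.
a, b = fourier_coefficients(lambda t: t, 5)
for n in range(1, 6):
    print(n, round(a[n], 6), round(b[n], 6), -2 / n)
```

For \(g(t) = t\) the exact values follow from integrating by parts, so the printed \(b_n\) can be compared directly with \(-2/n\).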

This raises the question of which integral is meant. Here we treat an example based on the Riemann integral; I’ll cover a Lebesgue-integral example later on.

We prove here that the function \[

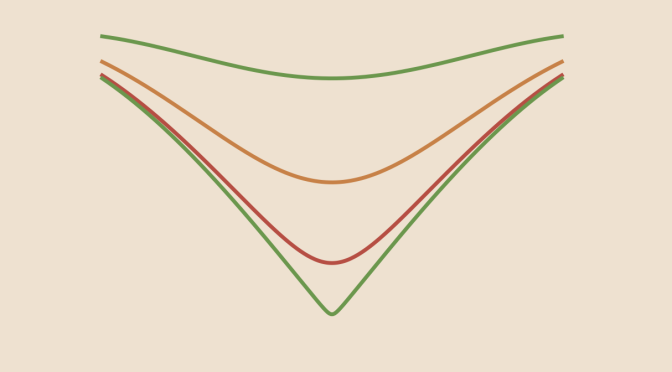

f(x)= \sum_{n=1}^\infty \frac{\sin nx}{\sqrt{n}}\] is a convergent trigonometric series but is not a Fourier series. Continue reading A trigonometric series that is not a Fourier series (Riemann-integration)
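Here is a sketch of one standard argument, not necessarily the one given in the full proof. It assumes that "integrable" means properly Riemann integrable on \([0, 2\pi]\), so that \(g\) is bounded.

Convergence: for \(x \notin 2\pi\mathbb{Z}\), \[
\Bigl|\sum_{n=1}^N \sin nx\Bigr| = \left|\frac{\sin\frac{Nx}{2}\,\sin\frac{(N+1)x}{2}}{\sin\frac{x}{2}}\right| \le \frac{1}{|\sin\frac{x}{2}|},\] so the partial sums are bounded. Since \(1/\sqrt{n}\) decreases to \(0\), Dirichlet's test gives convergence. For \(x \in 2\pi\mathbb{Z}\) every term vanishes.

Not a Fourier series: suppose a Riemann-integrable \(g\) had coefficients \(a_n = 0\) and \(b_n = 1/\sqrt{n}\). Then \(g\) would be bounded, hence \(g^2\) would be Riemann integrable, and Bessel's inequality would give \[
\frac{a_0^2}{2} + \sum_{n=1}^\infty \left(a_n^2 + b_n^2\right) \le \frac{1}{\pi}\int_0^{2\pi} g(t)^2 \, dt < \infty.\] This contradicts \(\sum_{n=1}^\infty b_n^2 = \sum_{n=1}^\infty \frac{1}{n} = \infty\). The boundedness of \(g\) is the step that relies on the Riemann integral.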